Microsoft

During my internship at Microsoft as a Software for Hardware intern, I worked on fully automating the keyboard and touchpad latency test for Surface devices. I implemented robot movement, camera control automation, computer vision, and data analysis, which increased testing efficiency by over 70% and improved the reliability of the latency data by decreasing its variation by 19%. I also investigated methods of running latency testing entirely on a test device, speaking with teams across Surface to understand various logging tools, the latency stack-up, and timing synchronization.

The previous method of latency testing was fully manual and could take up to 4 hours for a full keyboard and touchpad testing procedure. A robot arm either pressed a key or swiped across the touchpad, and the operator had to precisely time moving the robot and triggering two high-speed cameras. One camera was pointed at the keyboard/touchpad to determine when the robot arm touched it, and the other was pointed at the display to determine when a key showed up on screen or the laptop cursor moved. After each test, the operator had to scrub through both videos to find the frame at which they believed the keypress or touchpad swipe started and the frame at which the display responded. They then subtracted the two frame numbers and entered the perceived latency into a spreadsheet. This method was very time-intensive and also produced unreliable, subjective data: different operators perceived the first touch and the first display output at different frames, because the display's refresh rate meant that any change started off very faint.
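The manual calculation reduces to a frame difference divided by the camera frame rate. The following is a minimal Python sketch, not the team's tooling. It assumes both cameras share a synchronized frame timeline at the same frame rate. The function name `frame_latency_ms` and the 1000 fps example are hypothetical.

```python
def frame_latency_ms(touch_frame: int, display_frame: int, fps: float) -> float:
    """Convert a touch/display frame pair into latency in milliseconds.

    Assumes (hypothetically) that both cameras are synchronized so their
    frame indices lie on one shared timeline recorded at `fps` frames/s.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    if display_frame < touch_frame:
        raise ValueError("display response cannot precede the touch")
    # Each frame spans 1000 / fps milliseconds.
    return (display_frame - touch_frame) * 1000.0 / fps


# Hypothetical example: at 1000 fps, touch at frame 1200, response at frame 1253.
print(frame_latency_ms(1200, 1253, 1000.0))  # 53.0
```

Each event is only located to the nearest frame, so the result can be off by up to one frame period (1 ms at 1000 fps) in either direction.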

To improve the testing workflow, I first implemented a computer vision algorithm to determine when the robot first touches the device and when the display outputs a response. I also implemented character recognition. To detect when the robot touch occurs, I soldered a small LED board connected to an external power supply. The robot finger pressing down on the touchpad or key acts as a hardware switch, so the LED turns on as the finger presses down on the device. In C#, I detected when the LED turns on, and the associated change on the screen, by evaluating the change in brightness of the pixels inside bounding boxes I defined. I filtered the data using mean averaging along with various timing and repetition thresholds. These filters ensure that spurious changes, such as the LED flickering or flicker in the video footage caused by the lights in the test setup, are not recognized. I went through several iterations of the algorithm to create an implementation that analyzes over 3,700 frames in under two minutes. Finally, I used Tesseract to implement OCR (optical character recognition) to verify that the correct, expected key is being pressed.
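The LED and screen detection can be sketched as a threshold on the mean brightness inside a bounding box over time. This Python illustration is hedged and is not the original C# implementation. Several details are hypothetical choices: frames are assumed to be grayscale 2D arrays, the averaging is a trailing moving average, a settling period serves as the baseline, and a `hold` count stands in for the repetition threshold.

```python
from typing import List, Optional, Sequence, Tuple

# Hypothetical types: a grayscale frame is rows of 0-255 intensities,
# and a box is (x, y, width, height) in pixels.
Frame = Sequence[Sequence[int]]
Box = Tuple[int, int, int, int]


def roi_brightness(frame: Frame, box: Box) -> float:
    """Mean pixel intensity inside the bounding box."""
    x, y, w, h = box
    total = sum(sum(row[x:x + w]) for row in frame[y:y + h])
    return total / (w * h)


def moving_mean(values: Sequence[float], window: int) -> List[float]:
    """Trailing moving average; the first entries average over fewer samples."""
    out: List[float] = []
    running = 0.0
    for i, value in enumerate(values):
        running += value
        if i >= window:
            running -= values[i - window]
        out.append(running / min(i + 1, window))
    return out


def detect_onset(
    frames: Sequence[Frame],
    box: Box,
    threshold: float,
    window: int = 3,
    hold: int = 4,
    baseline_frames: int = 10,
) -> Optional[int]:
    """Return the first frame index whose smoothed ROI brightness differs from
    the baseline by more than `threshold` for `hold` consecutive frames.

    The first `baseline_frames` frames are assumed to precede the event and
    are used only to estimate the resting brightness.
    """
    smoothed = moving_mean([roi_brightness(f, box) for f in frames], window)
    if len(smoothed) <= baseline_frames:
        return None
    baseline = sum(smoothed[:baseline_frames]) / baseline_frames
    run_start, run_len = 0, 0
    for i in range(baseline_frames, len(smoothed)):
        # abs() catches both brightening (LED on) and darkening (dark glyph on a light screen).
        if abs(smoothed[i] - baseline) > threshold:
            if run_len == 0:
                run_start = i
            run_len += 1
            if run_len >= hold:
                return run_start
        else:
            run_len = 0
    return None
```

The function would run once on the keyboard-camera footage with a box around the LED, and once on the display footage with a box around the expected character or cursor. The difference between the two returned indices is the frame difference used for the latency. The moving average spreads a single bright frame (such as a light flicker) across `window` frames, so `hold` should exceed `window` for such a blip to be rejected. The trailing average can also delay the reported onset by up to `window - 1` frames when the threshold is high. For that reason, the window and threshold would need calibrating against footage where the true onset is known.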

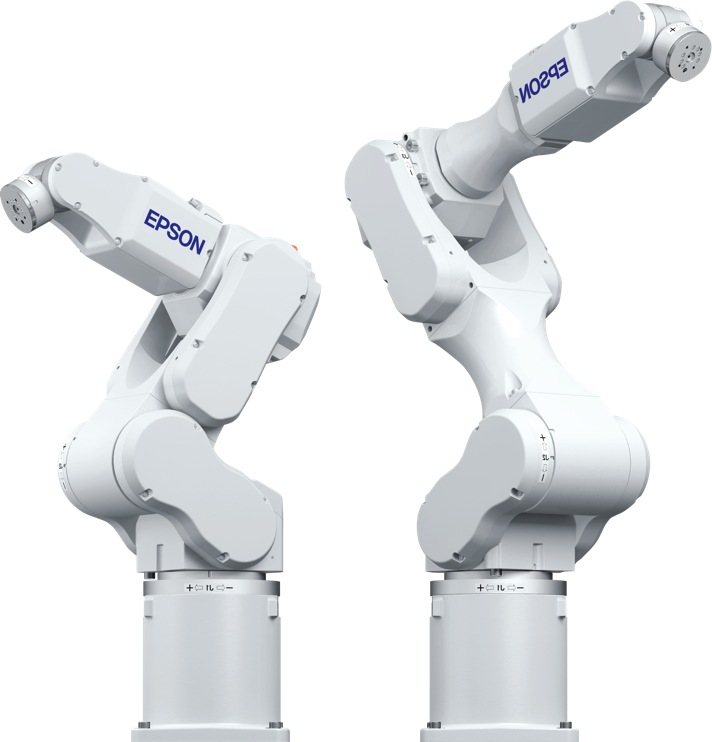
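For the Tesseract OCR check described above, a minimal sketch could crop the on-screen character and compare the recognized text to the expected key. This sketch uses the Python `pytesseract` wrapper and Pillow instead of the C# stack the project used. The helper names and the single-character `--psm 10` page-segmentation mode are assumptions, not details from the project.

```python
def normalize_ocr_text(text: str) -> str:
    """Drop whitespace, including the trailing newline/form feed Tesseract can emit."""
    return "".join(text.split())


def key_matches(ocr_text: str, expected_key: str) -> bool:
    """True when the OCR output is exactly the character the robot should have typed."""
    return normalize_ocr_text(ocr_text) == expected_key


def recognize_key(image_path: str) -> str:
    """Run Tesseract on a cropped image containing one typed character.

    Requires the Tesseract binary plus the pytesseract and Pillow packages;
    they are imported lazily so the pure helpers above work without them.
    """
    import pytesseract
    from PIL import Image

    # --psm 10 asks Tesseract to treat the image as a single character.
    with Image.open(image_path) as img:
        return pytesseract.image_to_string(img, config="--psm 10")
```

Usage would look like `key_matches(recognize_key("crop.png"), "a")`, where `crop.png` is a hypothetical crop of the display region.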

To automate the robot movement, I wrote C# scripts that interfaced with an in-house library, which in turn communicated with the SPEL+ library used to control the Epson 6-axis robots. I set coordinate locations and speed settings for each of our test variations. Camera configuration and automation required Selenium and OpenQA, with which I automated cursor movements and file-save setting inputs so that the full video capture and analysis workflow could run autonomously. I combined all of these scripts into a single C# .NET project and configured it to align with my team's existing Schism framework, which allowed much more flexibility in developing new test procedures and enabled hardware-less testing. We found that the automated testing decreased testing time by over 70% and greatly reduced the variability of the latency data we were reading, since operator subjectivity had added over 36% variation even within the same test run.
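One common way to quantify run-to-run variability is the coefficient of variation: the sample standard deviation divided by the mean. This Python sketch assumes that metric. The 36% and 19% figures above may have been computed differently, and the function names are hypothetical.

```python
import statistics
from typing import Sequence


def coefficient_of_variation(latencies_ms: Sequence[float]) -> float:
    """Sample standard deviation as a percentage of the mean latency."""
    if len(latencies_ms) < 2:
        raise ValueError("need at least two latency measurements")
    mean = statistics.mean(latencies_ms)
    if mean <= 0:
        raise ValueError("mean latency must be positive")
    return statistics.stdev(latencies_ms) / mean * 100.0


def relative_reduction(before: float, after: float) -> float:
    """Percentage decrease from `before` to `after` (e.g. manual vs. automated CV)."""
    if before == 0:
        raise ValueError("baseline must be non-zero")
    return (before - after) / before * 100.0


# Hypothetical repeated measurements from a single test run, in ms.
print(round(coefficient_of_variation([48.0, 50.0, 52.0]), 2))  # 4.0
```

Computing the coefficient for manual and automated runs of the same test, then passing both values to `relative_reduction`, gives one possible way to express the improvement as a percentage.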